Generative models have shown remarkable progress in 3D generation. Recent works learn 3D representations explicitly under text-3D guidance. However, the limited scale of paired text-3D data restricts both the generative diversity and the text controllability of these models. Generators can easily fall into a stereotyped concept for certain text prompts, thereby losing open-world generation ability.

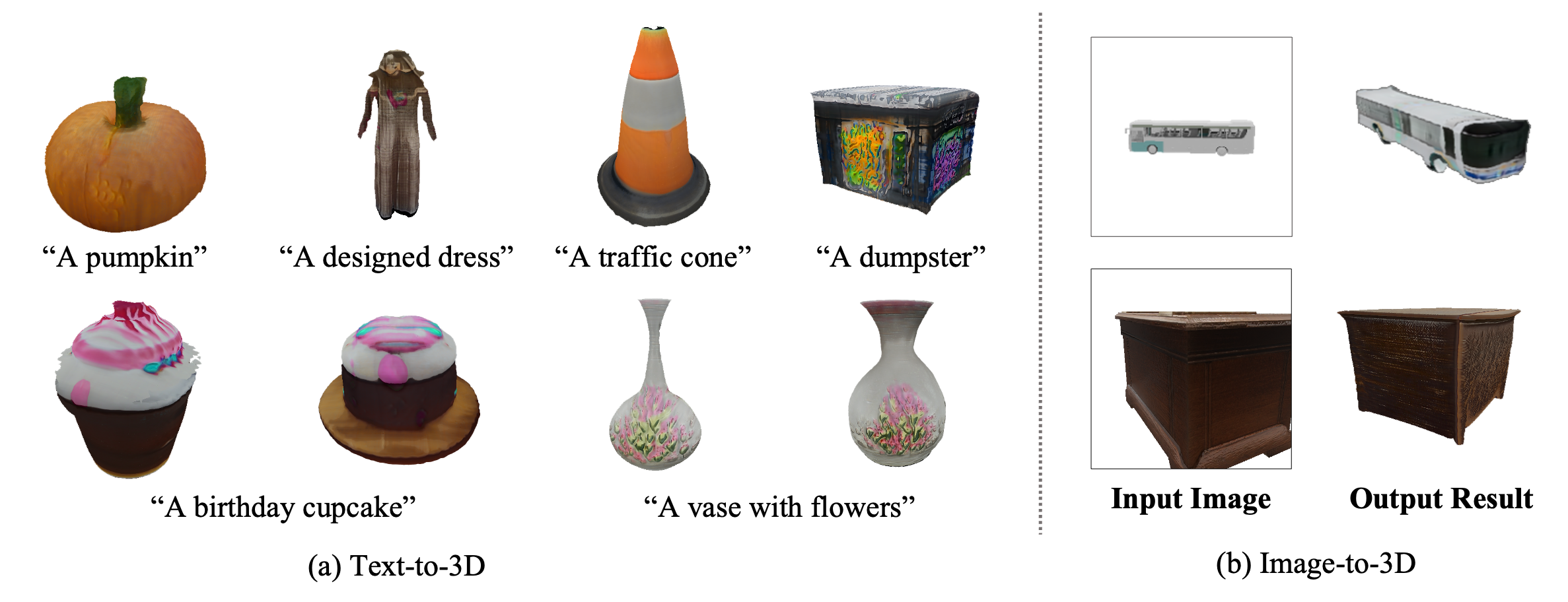

To tackle this issue, we introduce an open-world 3D generative model, namely TextField3D. Specifically, rather than using text prompts as input directly, we propose injecting dynamic noise into the expressive fields of given text prompts, i.e., Noisy Text Fields (NTFs). In this way, limited 3D data can be mapped to a potential textual latent space that is expanded by NTFs. Accordingly, a novel NTFGen module is proposed to model open-world text latent codes in the noisy fields. In addition, we propose a novel NTFBind module to support image-conditional 3D generation, which aligns view-invariant image latent codes to the noisy fields. We then feed the obtained latent codes into a differentiable 3D representation to generate the corresponding 3D outputs. To guide conditional generation in both geometry and texture, multi-modal discrimination is constructed with a text-3D discriminator and a text-2.5D discriminator.
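To make the idea of a noisy text field more concrete, the sketch below samples latent codes from a neighbourhood around a text embedding instead of using the embedding directly. It is a minimal illustration under stated assumptions, not the NTFGen module itself: the abstract does not specify the noise distribution, so we assume an isotropic Gaussian on the L2-normalized embedding whose scale is drawn afresh for every sample (our reading of "dynamic" noise), followed by re-normalization to the unit sphere. The function names `sample_noisy_text_field`, `l2_normalize`, and `cosine_similarity` and the parameters `sigma_min` and `sigma_max` are hypothetical.

```python
import math
import random
from typing import List, Optional

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_n, b_n))


def sample_noisy_text_field(
    text_embedding: Vector,
    sigma_min: float = 0.0,
    sigma_max: float = 0.1,
    rng: Optional[random.Random] = None,
) -> Vector:
    """Draw one latent code from a hypothetical noisy field around a text embedding.

    Assumptions (not taken from the paper): the field is an isotropic Gaussian
    centred on the normalized embedding, its scale sigma is sampled uniformly
    from [sigma_min, sigma_max] on every call ("dynamic" noise), and the
    perturbed code is projected back onto the unit sphere.
    """
    rng = rng or random.Random()
    sigma = rng.uniform(sigma_min, sigma_max)  # fresh noise scale per sample
    center = l2_normalize(text_embedding)
    noisy = [c + rng.gauss(0.0, sigma) for c in center]
    return l2_normalize(noisy)


if __name__ == "__main__":
    rng = random.Random(0)
    text_code = [0.3, -1.2, 0.5, 0.8]  # toy stand-in for a text encoder output
    for _ in range(3):
        z = sample_noisy_text_field(text_code, 0.05, 0.2, rng)
        print(round(cosine_similarity(z, text_code), 3))
```

Each call returns a different code that stays close to the original prompt embedding, so one text-3D pair can supervise a small region of the latent space rather than a single point. Under the same assumptions, an image-conditional variant in the spirit of NTFBind would train an image encoder so that its output lands inside such a field; that alignment objective is not sketched here.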

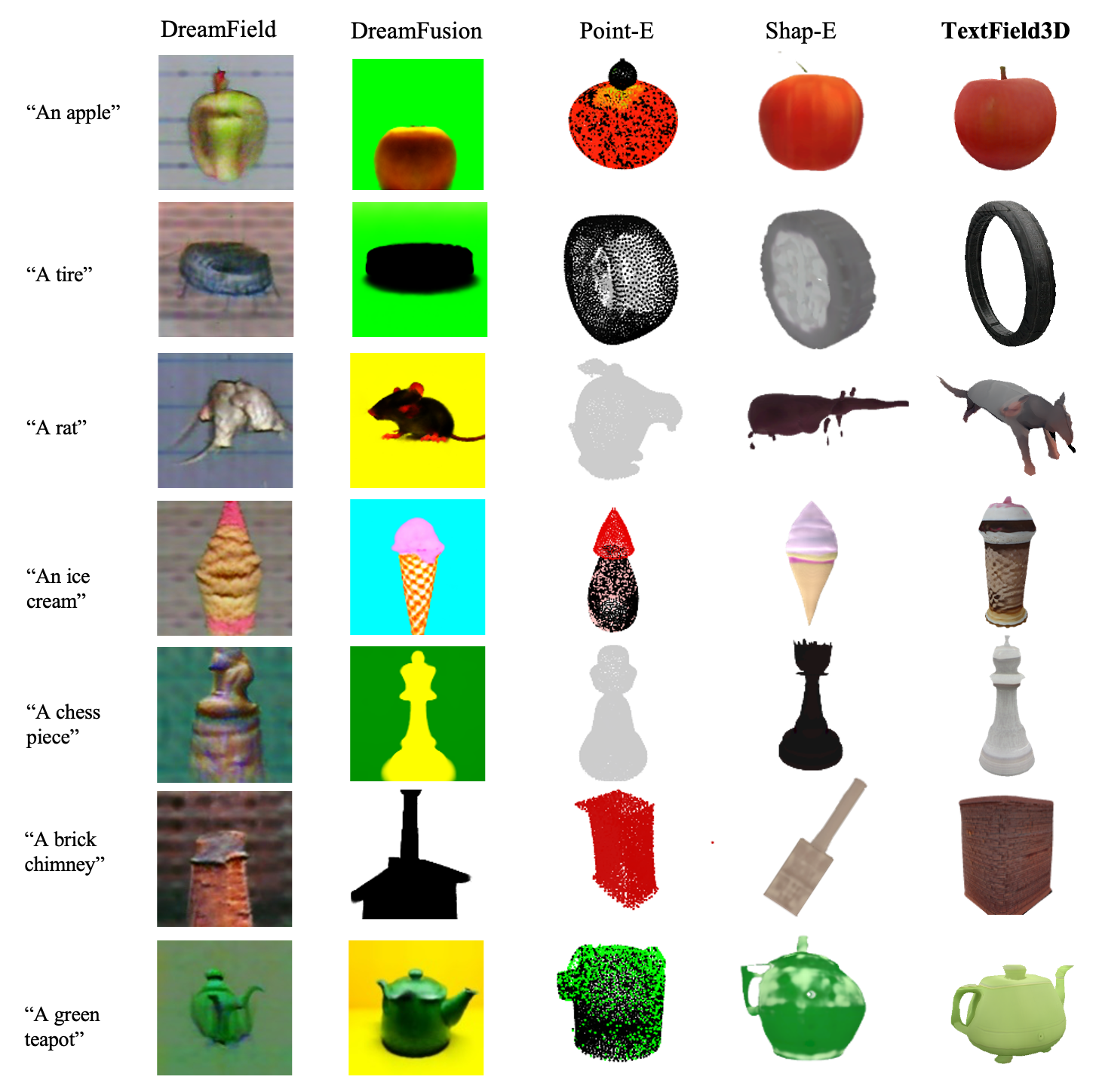

Compared to previous methods, TextField3D offers three merits: 1) generation diversity, 2) text consistency, and 3) low latency. Extensive experiments demonstrate that our method achieves open-world 3D generation capability in terms of generation diversity and text-control complexity.